Mendelson AS2 is one of the most widely used AS2 clients, and is also the unofficial AS2 testing tool that we use here at AdroitLogic (alongside OpenAS2 and others).

While Mendelson does offer quite a handful of attractive features, we needed more flexibility in order to integrate it into our testing cycles, especially when it comes to programmatic test automation of our AS2Gateway.

A spark of hope

If you have a curious eye, you might already have glimpsed the following in the log window of the Mendelson UI, right after it is fired up:

    [8:30:42 AM] Client connected to localhost/127.0.0.1:1235
    [8:30:44 AM] Logged in as user "admin"

So there's probably a server-client distinction among Mendelson's numerous components: a server that handles AS2 communication, and a client that authenticates to it and provides the necessary instructions.

This is confirmed by the docs.

What if...

So what if we could manipulate the client component of Mendelson AS2, and use it to programmatically perform AS2 operations, such as sending messages and checking received ones under different, programmatically configured partner and local station configurations?

Guess what? That's totally possible.

Mendelson comes bundled with a wide range of Java clients, in addition to the GUI client that you see every day. Different ones are available for different tasks, such as configuration, general commands, file transfers, etc. It's just a matter of picking and choosing the matching set of clients and request/response pairs, and wiring them together to compose the flow you want.

Which could turn out to be harder than you think, due to the lack of decent client documentation (at least for the stuff I searched for).

Digging for the gold

Fortunately the source is available online, so you can just download and extract it, plug it into an IDE like IntelliJ or Eclipse, and start hunting for classes with suspicious names, e.g. those having "client", "request" or "message" in their class or package names. If your IDE supports class decompilation, you could also simply add the main AS2 JAR (<Mendelson installation root>/as2.jar) to your project's build path (although I cannot guarantee the legality of such a move!).
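If you would rather not click through packages by hand, the name hunt can be roughed out with nothing but the JDK. The following is a minimal sketch, not part of Mendelson: `JarClassHunter`, `looksInteresting()` and `hunt()` are names I made up for illustration, and the keyword list simply mirrors the three words mentioned above. It only lists class entries inside a JAR; it does not decompile or load anything.

```java
import java.io.IOException;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Arrays;
import java.util.List;
import java.util.Locale;
import java.util.jar.JarEntry;
import java.util.jar.JarFile;
import java.util.stream.Collectors;

// Hypothetical helper (not a Mendelson class): lists "suspicious" class names in a JAR.
final class JarClassHunter {

    // keywords taken from the text above; adjust to taste
    private static final List<String> KEYWORDS = Arrays.asList("client", "request", "message");

    private JarClassHunter() {
    }

    /** True if the entry is a class file whose path (package or class name) contains a keyword. */
    static boolean looksInteresting(String entryName) {
        String lower = entryName.toLowerCase(Locale.ROOT);
        return lower.endsWith(".class") && KEYWORDS.stream().anyMatch(lower::contains);
    }

    /** Returns the sorted names of all matching class entries in the given JAR. */
    static List<String> hunt(Path jar) throws IOException {
        try (JarFile jarFile = new JarFile(jar.toFile())) {
            return jarFile.stream()
                    .map(JarEntry::getName)
                    .filter(JarClassHunter::looksInteresting)
                    .sorted()
                    .collect(Collectors.toList());
        }
    }

    public static void main(String[] args) throws IOException {
        if (args.length != 1) {
            System.err.println("usage: JarClassHunter <path to JAR>");
            return;
        }
        hunt(Paths.get(args[0])).forEach(System.out::println);
    }
}
```

On Java 11 or later you should be able to run it directly as `java JarClassHunter.java <path to JAR>`, pointing it at the as2.jar mentioned above. Expect a long list; the keywords are deliberately broad.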

Well, my understanding may not be perfect, but this is what my findings revealed regarding tapping into Mendelson's AS2 client ecosystem:

- You start by creating a de.mendelson.util.clientserver.BaseClient derivative of the required type, providing either a host-port-user-password combination for a server (which we already have, when running the UI; usually configurable at <Mendelson installation root>/passwd), or another pre-initialized BaseClient instance.
- You compose a request entity, picking one out of the wide range of request-response classes deriving from de.mendelson.util.clientserver.messages.ClientServerMessage (yup, I too wished the base class were <something>Request; looks a bit clumsy, but gotta live with it; at least the actual concrete class name ends with "Request"!).
- Now you submit the request entity to one of the sender methods of your client (such as sendSync()), and get hold of the response, another ClientServerMessage instance (with a name ending with, you guessed it, "Response").
- You now consult the response entity to see if the operation succeeded (e.g. response.getException() == null) and to retrieve what you were looking for, in case it was a query.
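The last two steps (send, then check) repeat for every single operation, so it may be worth wrapping them once. Below is a minimal, self-contained sketch of that pattern. Note the assumptions: `Reply` is a hypothetical stand-in interface that I invented so the example compiles without the Mendelson JAR, and `sendChecked()` is my own helper name; neither is a Mendelson class or method. Treating a null reply as a failure is also my assumption.

```java
import java.util.function.Function;

// Sketch of the "send, then check" pattern; all types here are stand-ins, not Mendelson classes.
final class SendAndCheckSketch {

    /** Hypothetical stand-in for a response that may carry a server-side exception. */
    interface Reply {
        Throwable getException();
    }

    private SendAndCheckSketch() {
    }

    /**
     * Sends the request through the given synchronous sender and fails fast
     * if no reply came back or if the reply carries an exception.
     */
    static <Q, R extends Reply> R sendChecked(Function<Q, R> sendSync, Q request) {
        R reply = sendSync.apply(request);
        if (reply == null) {
            throw new IllegalStateException("No response received for " + request);
        }
        Throwable error = reply.getException();
        if (error != null) {
            throw new IllegalStateException("Server reported a failure", error);
        }
        return reply;
    }

    public static void main(String[] args) {
        // a fake "server" whose replies never carry an exception
        Function<String, Reply> okServer = request -> () -> null;
        Reply reply = sendChecked(okServer, "ping");
        System.out.println("succeeded: " + (reply.getException() == null));
    }
}
```

To use the same idea against a real server, you would swap `Reply` for whatever response base type your Mendelson version exposes and pass the client's sendSync() as the sender; I have not verified that the types line up without further adaptation.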

While it sounds simple, some operations such as sending messages and browsing through old messages require a bit of insight into how the gears interlock.

Your first move

Let's start by creating a client for sending our commands to the server:

    /*
     "NoOpClientSessionHandlerCallback" is a bare-bones implementation of
     de.mendelson.util.clientserver.ClientSessionHandlerCallback;
     you could also use one of the existing implementations, like "AnonymousTextClient"
    */
    BaseClient client = new BaseClient(new NoOpClientSessionHandlerCallback(logger));

    // connect first, then log in; bail out if either step fails
    if (!client.connect(new InetSocketAddress(host, port), 1000) ||
            client.login("admin", "admin".toCharArray(), AS2ServerVersion.getFullProductName())
                    .getState() != LoginState.STATE_AUTHENTICATION_SUCCESS) {
        throw new IllegalStateException("Login failed");
    }
    // done!

My partners!

For most operations, you need to have in advance the Partner entities representing the list of configured partners (and local stations; by the way, I wish it were possible to treat local stations as separate entities, for the sake of distinguishing their role, similar to how AS2Gateway does it):

    PartnerListRequest listReq = new PartnerListRequest(PartnerListRequest.LIST_ALL);

    /* you can optionally receive a filtered result based on the partner ID:
    listReq.setAdditionalListOptionStr(AS2GX); */

    // cast() is my tiny utility method for casting the response to the appropriate type (2nd argument)
    List<Partner> partners = cast(client.sendSync(listReq), PartnerListResponse.class).getList();

    /* now you can filter the "partners" list to retrieve the interested partner and local station;
    let's call them "partnerEntity" and "stationEntity" */
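The cast() utility used above is not part of Mendelson, and I have not shown its code. In case you want something similar, here is a minimal sketch of how such a helper could look. The class name `ResponseCasts` and the choice to accept a plain Object (so that the example compiles without the Mendelson JAR) are assumptions made for illustration; a real version would probably take the response base type instead.

```java
// Sketch of a cast() helper; not a Mendelson class.
final class ResponseCasts {

    private ResponseCasts() {
    }

    /**
     * Casts a response to the expected type (2nd argument), failing with a
     * descriptive error instead of a bare ClassCastException or NullPointerException.
     */
    static <T> T cast(Object response, Class<T> type) {
        if (response == null) {
            throw new IllegalStateException("No response received; expected " + type.getSimpleName());
        }
        if (!type.isInstance(response)) {
            throw new IllegalStateException("Expected " + type.getSimpleName()
                    + " but got " + response.getClass().getSimpleName());
        }
        return type.cast(response);
    }

    public static void main(String[] args) {
        Object reply = "pong";
        // the caller gets back a properly typed value, with no explicit cast at the call site
        String text = cast(reply, String.class);
        System.out.println(text);
    }
}
```

The point of passing the Class token is that call sites such as the partner listing above stay on one line while still producing a readable error when the server returns something unexpected.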

Sending stuff out

For a send, you first have to individually upload each outbound attachment via a de.mendelson.util.clientserver.clients.datatransfer.TransferClient, accumulating the returned "hashes", and finally submit a de.mendelson.comm.as2.client.manualsend.ManualSendRequest containing the hashes along with the recipient and other information. (If you hadn't noticed, this client-based approach inherently allows you to send multiple attachments in a single message, which is not facilitated via the GUI :) )

    // "files" is a String array containing paths of files for upload

    // create a new file transfer client, wrapping our existing "client"
    TransferClient tc = new TransferClient(client);

    ManualSendRequest sendReq = new ManualSendRequest();
    sendReq.setSender(stationEntity);
    sendReq.setReceiver(partnerEntity);

    List<String> hashes = new ArrayList<>();
    List<String> fileNames = sendReq.getFilenames();

    // upload each file separately
    for (String file : files) {
        try (InputStream in = new FileInputStream(file)) {
            fileNames.add(Paths.get(file).getFileName().toString());
            // upload as chunks, set returned hash as payload identifier
            hashes.add(tc.uploadChunked(in));
        }
    }
    sendReq.setUploadHashs(hashes);

    // submit actual message for sending
    Throwable e = client.sendSync(sendReq).getException();
    if (e != null) {
        throw e;
    }
    // done!

Delving into the history

Message history retrieval is fairly granular, with separate requests for list, detail and attachment queries. A de.mendelson.comm.as2.message.clientserver.MessageOverviewRequest gives you the list of messages matching some filter criteria, whose message IDs can then be used in de.mendelson.comm.as2.message.clientserver.MessageDetailRequests in order to retrieve further AS2-level details of the message.

To retrieve a list of messages:

    // retrieve messages received from "sender" on local station "receiver"
    MessageOverviewFilter filter = new MessageOverviewFilter();
    filter.setShowPartner(sender);
    filter.setShowLocalStation(receiver);
    List<AS2MessageInfo> msgs = cast(client.sendSync(new MessageOverviewRequest(filter)),
            MessageOverviewResponse.class).getList();

To retrieve an individual message, just send a MessageOverviewRequest with the message ID instead of a filter:

    // although it returns a list, it should theoretically contain a single message matching "as2MsgId"
    AS2MessageInfo msg = cast(client.sendSync(new MessageOverviewRequest(as2MsgId)),
            MessageOverviewResponse.class).getList().get(0);

If you want the actual content (attachments) delivered in a message, just send a de.mendelson.comm.as2.message.clientserver.MessagePayloadRequest with the message ID; but ensure that you invoke loadDataFromPayloadFile() on each retrieved payload entity before you attempt to read its content via getData().

    for (AS2Payload payload : cast(client.sendSync(new MessagePayloadRequest(msg.getMessageId())),
            MessagePayloadResponse.class).getList()) {
        // WARNING: this loads the payload into memory!
        payload.loadDataFromPayloadFile();
        byte[] content = payload.getData();
    }

In closing

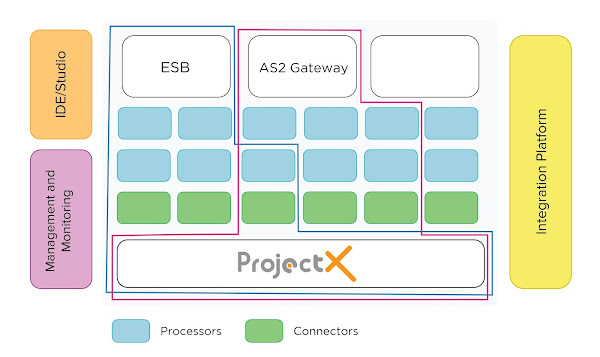

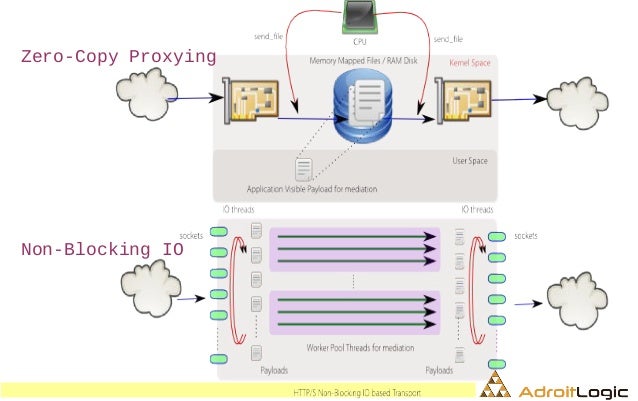

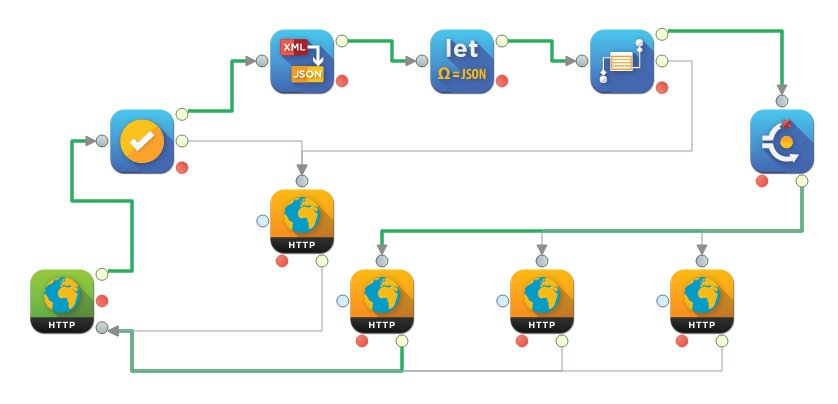

I hope the above helps you get started in your quest for Nirvana with Mendelson AS2; cheers! And don't forget to check out our new and improved AS2Gateway, which is fully compatible with Mendelson AS2 (or any other AS2 broker, for that matter)!