In July 2018, Google Cloud Functions went GA. A few months before that, in March 2018, while it was still in beta, I took some screenshots during my first shot at Cloud Functions. But then one thing led to another, and another, and another, and this blog post was never born.

Now, after a whole year of downright obsoletion, I present to you my "getting started with Google Cloud Functions [beta]" guide.

Surely a lot has changed: the beta tag is gone, and hordes of new features have been introduced, such as the Python runtime, environment variables, built-in test invocations and logs, and a lot that I haven't even seen yet.

Activate billing on your GCP account

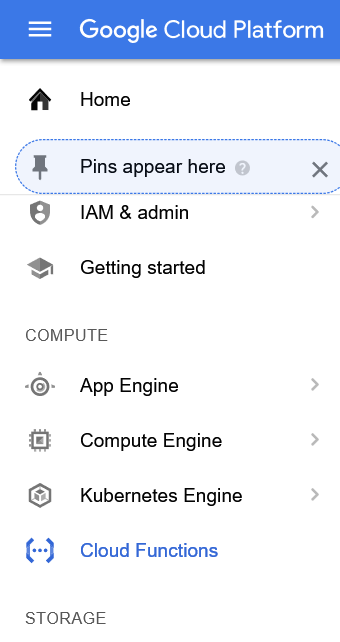

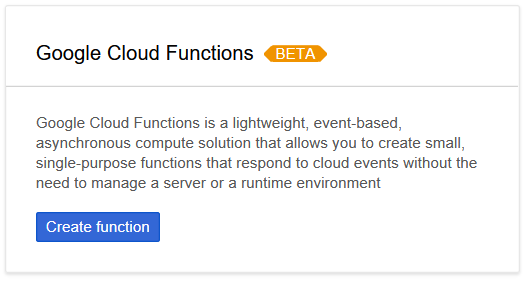

Now, if you rush to the Cloud Functions dashboard, you may notice that you first need to enable the Cloud Functions API for your currently active GCP project (unless you have already done so).

Cloud Functions need a billing-enabled GCP project, so that's the first thing we need to do. Google's official guide is fairly easy to follow.

Google promises that we won't be charged during the 1-year, $300 free trial, so we're covered here.

Create a cloud function

Once billing is set up, head over to the Cloud Functions dashboard and click Create function.

This takes you to the Create function wizard. On its first page, you define most of the basics of your cloud function: name, maximum memory, trigger, and source code.

Later on, you get to define the entry point (the exported Node.js function to invoke when the Cloud Function is hit), along with other settings such as the deployment region and the timeout (maximum running time per request).

Trigger options

Triggers can invoke your cloud function in response to external actions: active ones like HTTP requests or passive ones like events from Cloud Storage buckets or Cloud Pub/Sub topics.

On platforms like AWS, this makes little difference: Lambda configures and handles both kinds of trigger in the same way. In GCP, however, they are handled so differently that even the function signature itself changes.

If you switch between the trigger types, you can see the sample code under Source code change accordingly:

HTTP functions

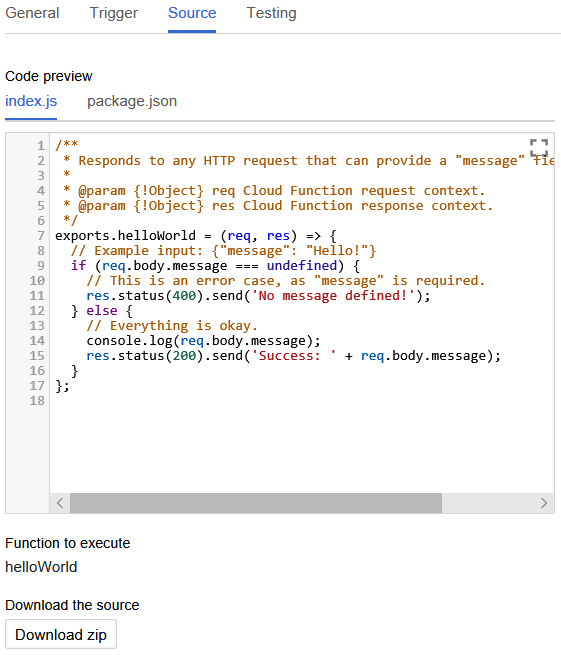

Google generates the following sample:

```javascript
/**
 * Responds to any HTTP request that can provide a "message" field in the body.
 *
 * @param {!Object} req Cloud Function request context.
 * @param {!Object} res Cloud Function response context.
 */
exports.helloWorld = (req, res) => {
  // Example input: {"message": "Hello!"}
  if (req.body.message === undefined) {
    // This is an error case, as "message" is required.
    res.status(400).send('No message defined!');
  } else {
    // Everything is okay.
    console.log(req.body.message);
    res.status(200).send('Success: ' + req.body.message);
  }
};
```

An HTTP function accepts a request and writes back to a response.

Google automatically provisions the HTTPS endpoint at https://{region}-{project-id}.cloudfunctions.net/{function-name}, so there is nothing more to configure in terms of triggers.
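Once the function is deployed, any HTTP client can call that endpoint. A minimal sketch, assuming Node.js 18+ (for the global `fetch`), the usual `https://{region}-{project-id}.cloudfunctions.net/{function-name}` URL form, and placeholder values for region, project ID and function name. The `functionUrl` and `callFunction` helpers are hypothetical.

```javascript
// Hypothetical helper: builds the endpoint URL from its parts, following
// the https://{region}-{project-id}.cloudfunctions.net/{function-name} form.
function functionUrl(region, projectId, functionName) {
  return `https://${region}-${projectId}.cloudfunctions.net/${functionName}`;
}

// Hypothetical helper: POSTs a JSON body, as the helloWorld sample expects.
// Requires Node.js 18+ for the global fetch.
async function callFunction(url, payload) {
  const response = await fetch(url, {
    method: 'POST',
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify(payload),
  });
  return { status: response.status, text: await response.text() };
}

// Placeholder values: substitute your own region, project ID and function name.
const url = functionUrl('us-central1', 'my-project', 'helloWorld');
console.log(url); // prints: https://us-central1-my-project.cloudfunctions.net/helloWorld

// Uncomment to call a real deployment:
// callFunction(url, { message: 'Hello!' }).then(console.log);
```

Sending `Content-Type: application/json` matters here, since the sample reads `req.body.message` and relies on the body being parsed as JSON.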

Event-based functions

Google's generated sample looks like this:

```javascript
/**
 * Triggered from a message on a Cloud Pub/Sub topic.
 *
 * @param {!Object} event The Cloud Functions event.
 * @param {!Function} callback The callback function.
 */
exports.subscribe = (event, callback) => {
  // The Cloud Pub/Sub Message object.
  const pubsubMessage = event.data;

  // We're just going to log the message to prove that
  // it worked.
  console.log(Buffer.from(pubsubMessage.data, 'base64').toString());

  // Don't forget to call the callback.
  callback();
};
```

Event-based functions accept an event and convey success or failure (and optionally a result) via a callback.

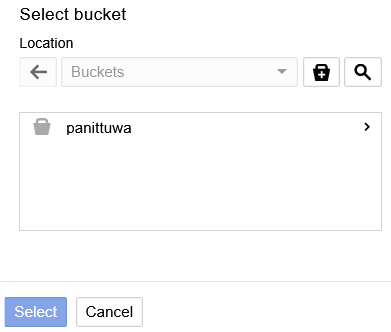

Here we need to configure an event source (Pub/Sub topic or Storage bucket) to trigger the function.

The good thing is that GCP lets you pick an existing topic or bucket, or create a new one, right inside the cloud function wizard.

Picking an existing entity is just as easy.

Automatic retry

Event-based functions also support automatic retries: you can configure GCP to redeliver an event to the function automatically if the previous attempt failed to process it.

This is good for taking care of temporary failures, but it can be dangerous if the error is due to a bug in your code: GCP will keep on retrying the failing event for up to 7 days, draining your quotas and growing your bill.

A function is born

Now that we are done with the configuration, click Create.

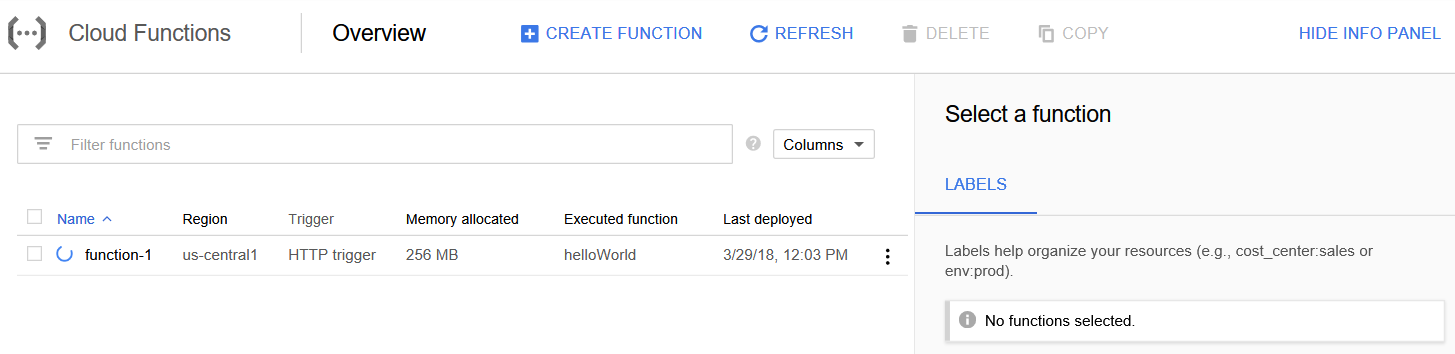

GCP takes you back to the function dashboard, where the new function is listed with a "pending" or "creating" status.

It may take a while, but eventually the successfully created function shows up in Active status.

Back in the beta days, I sometimes got errors when trying to create functions, for no apparent reason. Hopefully nobody is that unlucky these days.

It is good practice to label your functions, so you can find and manage them easily. GCP allows you to do this right from the dashboard.

Function actions

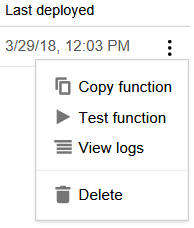

Click the three-dot button at the far right end of the function entry to see what you can do next.

Back in the beta days, some of these options (like Copy Function) had a few hiccups; hopefully those are long gone now!

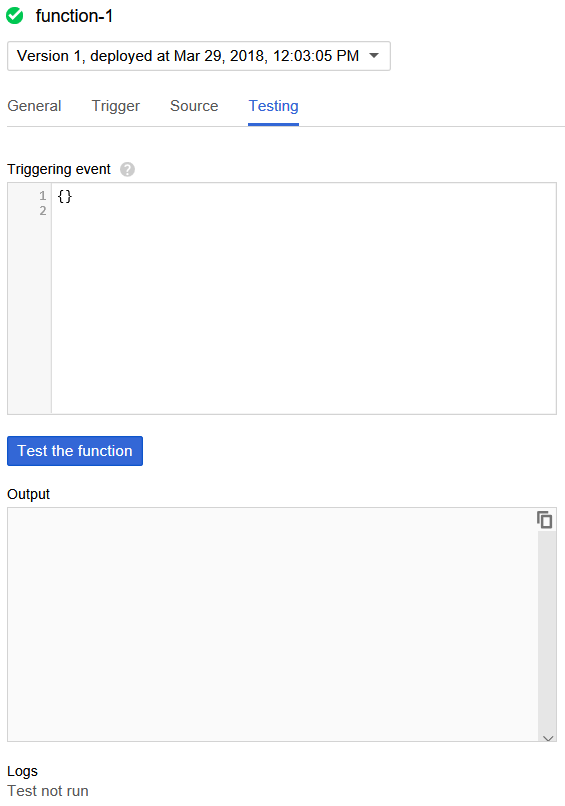

Testing

Test Function provides a nice interface where you can invoke the function with a custom payload and view the output and execution logs right away. However, it still lacks the ability to define and rerun saved custom test events, as you can in AWS or Sigma. Another caveat is that test invocations hit the same production function (unlike, say, Sigma's test environment), so they count towards the logs and statistics of your actual function.
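The custom payload is just JSON. A small sketch for producing it, assuming the two samples above: for the HTTP `helloWorld` sample the payload becomes the request body, and for the beta-era Pub/Sub `subscribe` sample the message text is assumed to go base64-encoded under a `data` key (which the handler then reads as `event.data.data`). `testPayload` is a hypothetical helper.

```javascript
// Hypothetical helper: returns JSON you could paste into the Test Function box.
function testPayload(triggerType, text) {
  if (triggerType === 'http') {
    // Becomes req.body for the helloWorld sample.
    return JSON.stringify({ message: text });
  }
  if (triggerType === 'pubsub') {
    // Assumed to surface as event.data, so the sample decodes event.data.data.
    return JSON.stringify({ data: Buffer.from(text).toString('base64') });
  }
  throw new Error(`Unsupported trigger type: ${triggerType}`);
}

console.log(testPayload('http', 'Hello!'));      // prints: {"message":"Hello!"}
console.log(testPayload('pubsub', 'test user')); // prints: {"data":"dGVzdCB1c2Vy"}
```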

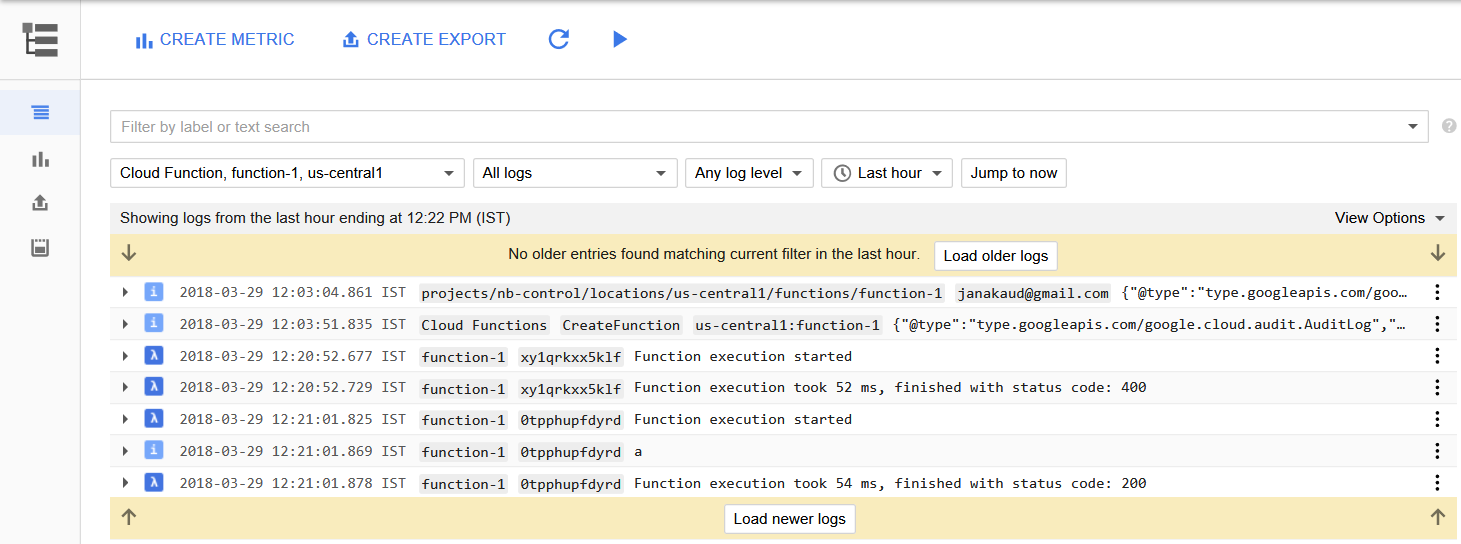

Logs

View Logs takes you to the familiar Stackdriver Logging page, where you can browse, sort, search, stream, and do all sorts of things with your function's logs. It takes a few seconds for the latest logs to appear, as is the case on other platforms as well.

More function details

You can click the function entry to see more details:

- The General tab shows a nice stats graph, along with basic function configuration such as runtime and memory.

- The Trigger tab shows the trigger configuration of the function.

  As of now, GCP doesn't allow you to change the trigger after you have created the function; we sincerely hope this restriction will be relaxed in the future!

- The Source tab has the familiar code viewer (although you cannot update and deploy code directly from there), along with a Download zip button for the code archive.

- The Testing tab we have already seen. It's pretty neat, too, for something that went GA just a few months ago.

So, that's what a cloud function looks like.

Or, to be precise, what it used to look like, back in the pre- and post-beta days.

I'm sure Google will catch up in the serverless race, with more event sources, languages, monitoring and so forth.

But do we need to wait? Absolutely not.

Cloud Functions are mature enough for most of your routine integrations. One major bummer is that they don't yet support timer schedules, but folks are already using workarounds.

Plus, many of the leading serverless development frameworks already support GCP!

So hop in - write your own serverless success story on GCP!
