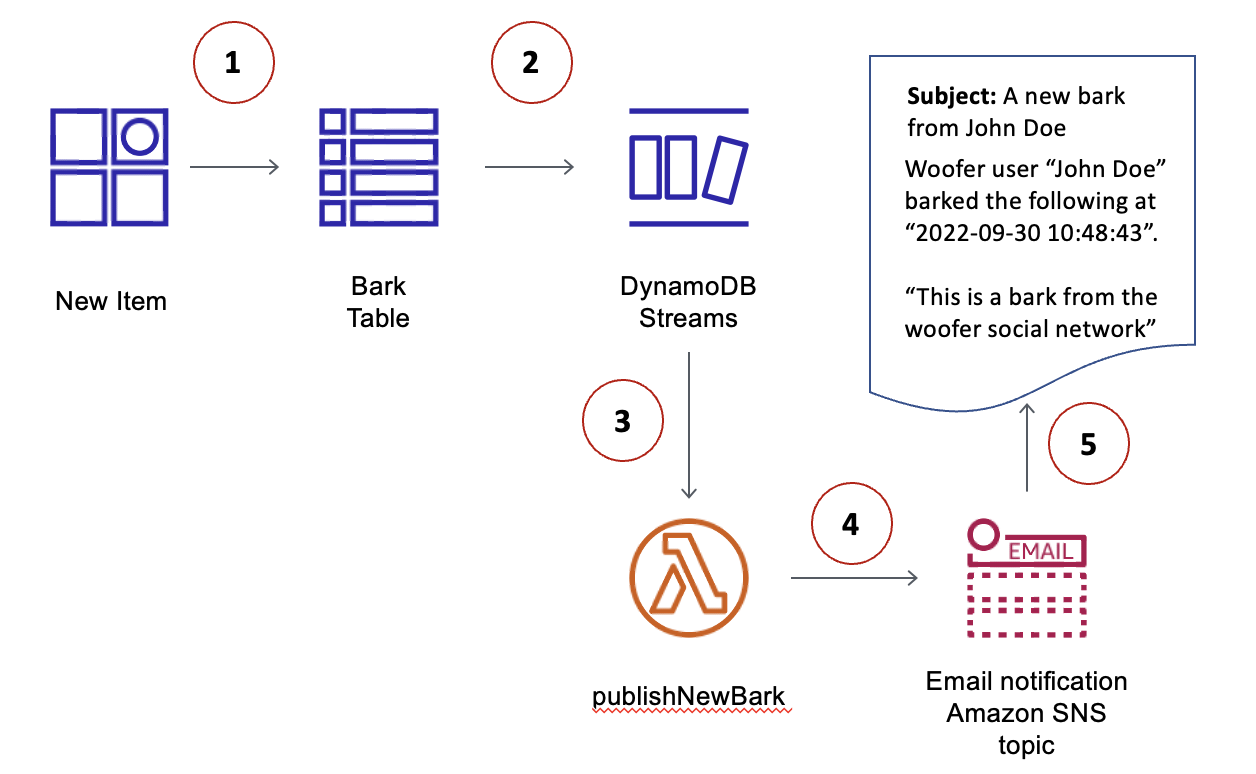

Recently we introduced two new AWS Lambda event sources (trigger types) for your serverless projects on Sigma cloud IDE: SQS queues and DynamoDB Streams. (Yup, AWS introduced them months ago; but we're still a tiny team, caught up in a thousand and one other things as well!)

While developing support for these triggers, I noticed a common (and yeah, pretty obvious) pattern in Lambda event source trigger configurations that I felt was worth sharing.

Why AWS Lambda triggers are messed up

Lambda - or rather AWS - has a peculiar and disorganized trigger architecture, to put it lightly. For different trigger types, you have to put configurations all over the place: targets for CloudWatch Events rules, integrations for API Gateway endpoints, notification configurations for S3 bucket events, and the like. Quite a mess, compared with platforms like GCP, where you configure everything in one place: the "trigger" config of the target function itself.

Configs. Configs. All over the place.

If you have used infrastructure as code (IaC) services like CloudFormation (CF) or Terraform (TF), you already know what I mean. You need mappings, linkages, permissions and other bells and whistles all over the place to get even a simple HTTP URL working. (SAM does simplify this a bit, but it comes with its own set of limitations - and we have tried our best to avoid such complexities in our Sigma IDE.)

Maybe this is to be expected, given the diversity of services offered by AWS and their timeline (Lambda, after all, is just a four-year-old kid). AWS surely had to pull off some crazy hacks to support triggering Lambdas from so many diverse services; hence the confusing, scattered configurations.

Event Source Mappings: light at the end of the tunnel?

Luckily, the more recently introduced stream-type triggers follow a common pattern:

- an AWS Lambda event source mapping,

- a set of permission statements on the Lambda's execution role that allow it to inspect, consume and acknowledge/remove messages on the stream, and

- a service-level permission that allows the event source to invoke the function

This way, you know exactly where you should configure the trigger, and how you should allow the Lambda to consume the event stream.

No more jumping around.

This is quite convenient when you rely on an IaC tool like CloudFormation:

```json
{
  ...
  // event source (SQS queue)
  "sqsq": {
    "Type": "AWS::SQS::Queue",
    "Properties": {
      "DelaySeconds": 0,
      "MaximumMessageSize": 262144,
      "MessageRetentionPeriod": 345600,
      "QueueName": "q",
      "ReceiveMessageWaitTimeSeconds": 0,
      "VisibilityTimeout": 30
    }
  },
  // event target (Lambda function)
  "tikjs": {
    "Type": "AWS::Lambda::Function",
    "Properties": {
      "FunctionName": "tikjs",
      "Description": "Invokes functions defined in tik/js.js in project tik. Generated by Sigma.",
      ...
    }
  },
  // function execution role that allows it (Lambda service)
  // to query SQS and remove read messages
  "tikjsExecutionRole": {
    "Type": "AWS::IAM::Role",
    "Properties": {
      "ManagedPolicyArns": [
        "arn:aws:iam::aws:policy/service-role/AWSLambdaBasicExecutionRole"
      ],
      "AssumeRolePolicyDocument": {
        "Version": "2012-10-17",
        "Statement": [
          {
            "Action": ["sts:AssumeRole"],
            "Effect": "Allow",
            "Principal": {
              "Service": ["lambda.amazonaws.com"]
            }
          }
        ]
      },
      "Policies": [
        {
          "PolicyName": "tikjsPolicy",
          "PolicyDocument": {
            "Statement": [
              {
                "Effect": "Allow",
                "Action": [
                  "sqs:GetQueueAttributes",
                  "sqs:ReceiveMessage",
                  "sqs:DeleteMessage"
                ],
                "Resource": {
                  "Fn::GetAtt": ["sqsq", "Arn"]
                }
              }
            ]
          }
        }
      ]
    }
  },
  // the actual event source mapping (SQS queue -> Lambda)
  "sqsqTriggertikjs0": {
    "Type": "AWS::Lambda::EventSourceMapping",
    "Properties": {
      "BatchSize": 10,
      "EventSourceArn": {
        "Fn::GetAtt": ["sqsq", "Arn"]
      },
      "FunctionName": {
        "Ref": "tikjs"
      }
    }
  },
  // grants permission for SQS service to invoke the Lambda
  // when messages are available in our queue
  "sqsqPermissiontikjs": {
    "Type": "AWS::Lambda::Permission",
    "Properties": {
      "Action": "lambda:InvokeFunction",
      "FunctionName": {
        "Ref": "tikjs"
      },
      "SourceArn": {
        "Fn::GetAtt": ["sqsq", "Arn"]
      },
      "Principal": "sqs.amazonaws.com"
    }
  }
  ...
}
```

(In fact, that was the whole reason/purpose of this post.)
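Because every stream-type trigger has the same shape, these pieces can be generated instead of hand-written. The Python sketch below is only an illustration under assumptions, not how Sigma actually does it: `sqs_trigger_resources` is a hypothetical helper that, given the logical IDs of a queue and a function, returns the execution-role statement plus the mapping and permission resources from the template above as plain dicts. The logical-ID naming (`sqsqTriggertikjs0`, `sqsqPermissiontikjs`) simply mirrors the example; it is not an AWS convention.

```python
import json


def get_att(logical_id: str, attribute: str) -> dict:
    """CloudFormation Fn::GetAtt intrinsic, e.g. the ARN of a queue."""
    return {"Fn::GetAtt": [logical_id, attribute]}


def sqs_trigger_resources(queue_id: str, function_id: str,
                          batch_size: int = 10, index: int = 0) -> dict:
    """Return the three pieces that wire an SQS queue to a Lambda function.

    Hypothetical helper: logical IDs mirror the example template
    ("sqsqTriggertikjs0", "sqsqPermissiontikjs"), not an AWS convention.
    """
    queue_arn = get_att(queue_id, "Arn")
    # 1. Statement for the function's execution role: inspect the queue,
    #    read messages, and delete them once processed.
    policy_statement = {
        "Effect": "Allow",
        "Action": ["sqs:GetQueueAttributes", "sqs:ReceiveMessage", "sqs:DeleteMessage"],
        "Resource": queue_arn,
    }
    resources = {
        # 2. The event source mapping itself (queue -> function).
        f"{queue_id}Trigger{function_id}{index}": {
            "Type": "AWS::Lambda::EventSourceMapping",
            "Properties": {
                "BatchSize": batch_size,
                "EventSourceArn": queue_arn,
                "FunctionName": {"Ref": function_id},
            },
        },
        # 3. Invoke permission, as in the template above.
        f"{queue_id}Permission{function_id}": {
            "Type": "AWS::Lambda::Permission",
            "Properties": {
                "Action": "lambda:InvokeFunction",
                "FunctionName": {"Ref": function_id},
                "SourceArn": queue_arn,
                "Principal": "sqs.amazonaws.com",
            },
        },
    }
    return {"policy_statement": policy_statement, "resources": resources}


if __name__ == "__main__":
    pieces = sqs_trigger_resources("sqsq", "tikjs")
    # Merge pieces["resources"] into the template's resources and append
    # pieces["policy_statement"] to the execution role's policy document.
    print(json.dumps(pieces, indent=2))
```

Keeping the generator in one place is the point of the pattern: wiring another queue to another function is a single call rather than three hand-edited resources.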

Tip: You do not need to worry about this whole IaC/CloudFormation thingy - or writing lengthy JSON/YAML - if you go with a fully automated resource management tool like SLAppForge Sigma serverless cloud IDE.

But... are Event Source Mappings ready for the big game?

They sure look promising, but event source mappings seem to need a bit more maturity before we can use them in fully automated production environments.

You cannot update an event source mapping via IaC.

For example, even more than four years after their inception, event source mappings cannot be updated once they have been created via an IaC tool like CloudFormation or Serverless Framework. This causes serious trouble: if you change the mapping configuration, you need to manually delete the old mapping and deploy the new one. Get it right the first time, or you'll have to run through a hectic manual cleanup to get the whole thing working again. So much for automation!

The event source arn (aaa) and function (bbb) provided mapping already exists. Please update or delete the existing mapping...

Polling? Sounds old-school.

There are other, less evident problems as well; for one, event source mappings are driven by polling. If your source is an SQS queue, the Lambda service keeps polling it until the next message arrives. While this is fully out of your hands, it does mean that you pay for the polling requests. Plus, as a dev, I don't feel that polling exactly fits the event-driven, serverless paradigm. Sure, everything boils down to polling in the end, but still...
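To make the polling model concrete, here is a deliberately simplified, in-memory Python model of what a poller does on your behalf for an SQS source: receive up to a batch of messages, invoke the function with the batch, and delete the messages only if the invocation succeeded. It is an illustration under assumptions, not AWS's implementation: `FakeQueue`, `poll_once` and the handler signature are hypothetical, and the visibility timeout is reduced to "undeleted messages become visible again when `expire_in_flight` is called". Note that every `poll_once` call counts as a receive request, even when the queue is empty.

```python
from collections import deque
from typing import Callable, List


class FakeQueue:
    """Hypothetical in-memory stand-in for an SQS queue."""

    def __init__(self, messages: List[str]):
        self.visible = deque(messages)
        self.in_flight: List[str] = []  # received but not yet deleted
        self.receive_calls = 0          # every poll counts, even an empty one

    def receive(self, max_messages: int) -> List[str]:
        self.receive_calls += 1
        batch = []
        while self.visible and len(batch) < max_messages:
            batch.append(self.visible.popleft())
        self.in_flight.extend(batch)
        return batch

    def delete(self, batch: List[str]) -> None:
        for message in batch:
            self.in_flight.remove(message)

    def expire_in_flight(self) -> None:
        # Visibility timeout elapsed: undeleted messages become visible again.
        self.visible.extend(self.in_flight)
        self.in_flight.clear()


def poll_once(queue: FakeQueue, handler: Callable[[List[str]], None],
              batch_size: int = 10) -> int:
    """One polling cycle: receive, invoke, delete on success. Returns batch size."""
    batch = queue.receive(batch_size)
    if not batch:
        return 0  # an empty poll still cost a receive call
    try:
        handler(batch)
    except Exception:
        return len(batch)  # leave the batch in flight; it will reappear later
    queue.delete(batch)
    return len(batch)


if __name__ == "__main__":
    q = FakeQueue([f"msg-{i}" for i in range(25)])
    processed: List[str] = []
    while poll_once(q, processed.extend):
        pass
    # 25 messages took 4 receive calls: 10 + 10 + 5, then one empty poll.
    print(len(processed), q.receive_calls)
```

Even in this toy model, the final empty poll is a paid-for request that did no work; a real poller keeps issuing such requests for as long as the mapping is enabled.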

In closing: why not just try out event source mappings?

Ready or not, looks like event source mappings are here to stay. With the growing popularity of data streaming (Kinesis), queue-driven distributed processing and coordination (SQS) and event ledgers (DynamoDB Streams), they will become ever more popular as time passes.

You can try out event source mappings in numerous ways: the AWS console, aws-cli, CloudFormation, Serverless Framework, and the easy-as-pie graphical IDE SLAppForge Sigma.

Easily manage your event source mappings - with just a drag-n-drop!

In Sigma IDE you can simply drag-n-drop an event source (SQS queue, DynamoDB table or Kinesis stream) onto the event variable of your Lambda function code. Sigma will pop up a dialog with the available mapping configurations, so you can easily configure the source mapping behavior. You can even configure an entirely new source (queue, table or stream) instead of using an existing one, right there within the pop-up.

When deployed, Sigma will auto-generate all necessary configurations and permissions for your new event source, and publish them to AWS for you. It's all fully managed, fully automated and fully transparent.

Enough talk. Let's start!