Originally written for The SLAppForge Blog; Feb 17, 2020

Monitoring your serverless application is crucial - especially while it is handling your production load. This brings us to today's topic, how to effectively monitor a serverless application.

Serverless = Ephemeral

Serverless environments are inherently ephemeral; once the execution completes, you don't have much left behind to investigate.

There's so much exciting talk about container reuse and keep-warm, but theoretically every single one of your function invocations could be a cold start. Even with reuse, you cannot access the environment to analyze the previous invocation until the next one comes in.

So, to effectively monitor a serverless system, we have to gather as much data as possible - while it is actually handling a request.

Monitoring the Ephemeral. The Dynamic. The Inaccessible.

Serverless offers the benefit of less management, through higher levels of abstraction; for obvious reasons, that comes hand in hand with the caveat of less visibility.

In short, you pretty much have to either:

- depend on what the serverless platform provider discloses to you, or

- write your own monitoring utilities (telemetry agents, instrumentations etc.) to squeeze out more metrics from the runtime

Log Analysis

Logs are the most common means of monitoring, auditing and troubleshooting traditional applications; not surprisingly, it holds true in serverless as well.

What's in a Log?

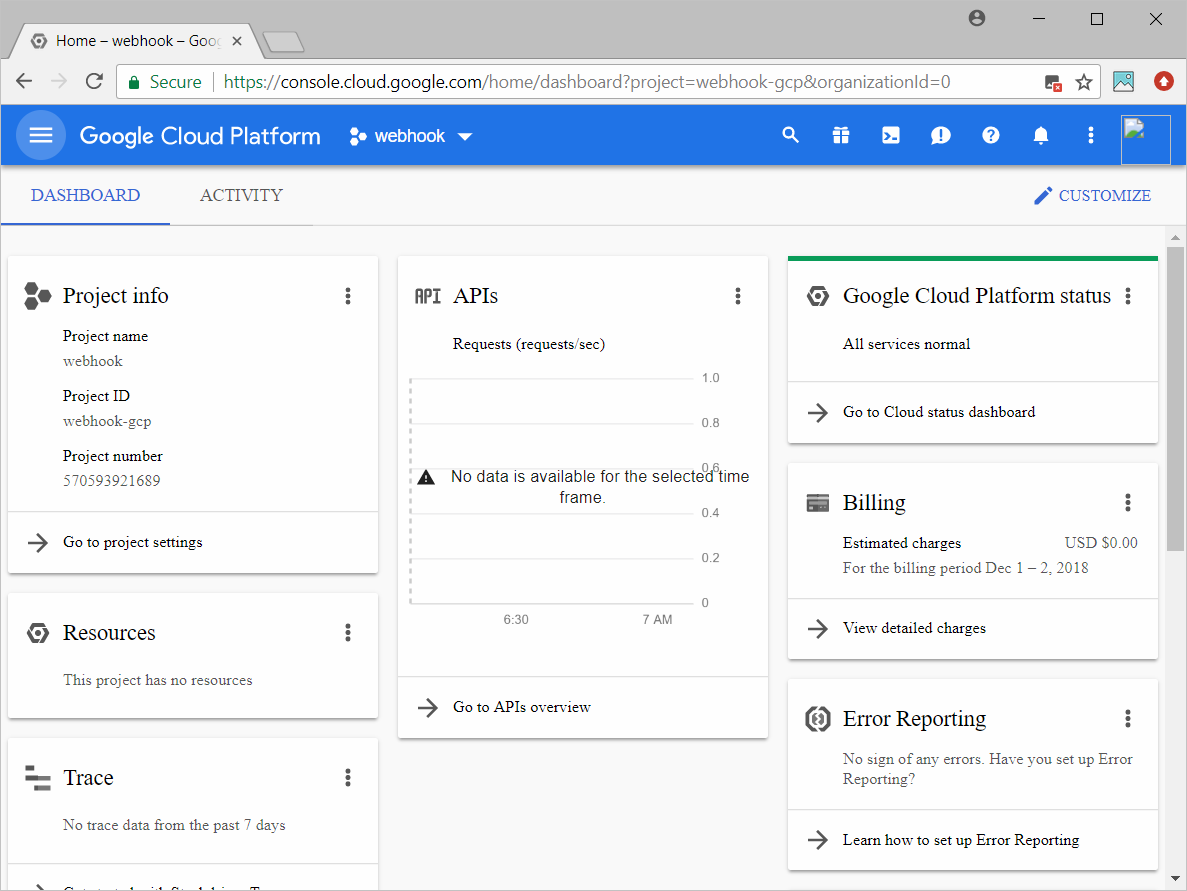

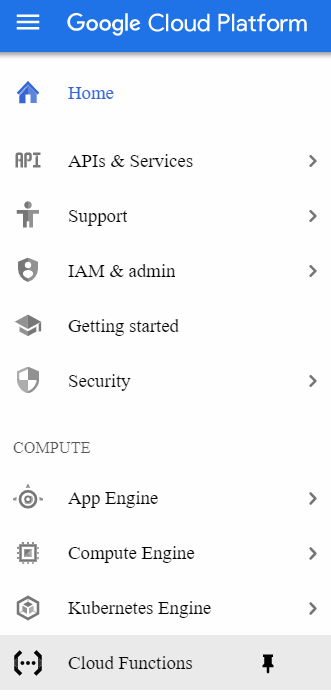

All major serverless platforms offer comprehensive logging for their FaaS elements: CloudWatch Logs for AWS Lambda, StackDriver Logging for Google Cloud Functions, Azure Monitor Logs for Azure Functions, CloudMonitor for Alibaba Cloud Functions, and so forth.

The logs usually contain:

- execution start marker, with a unique ID for each request/invocation

- all application logs generated during execution, up until the point of completion of the invocation

- execution summary: duration, resources used, billed quota etc.

Serverless is not just FaaS, and logging is not just about function executions. Other services like storage, databases and networking also provide their share of logging. While these logs are mostly associated with access and security auditing (e.g. CloudTrail auditing), they can still be merged with FaaS logs to enable more verbose, detailed monitoring.

Distributed Logging: A Nightmare?

One problem with the inherently distributed nature of serverless systems is that these logs are usually scattered all over the place, unlike in a traditional monolith where all logs would be neatly arranged in one or a few well-known files.

Imagine your backend consists of five Lambdas, which the web client invokes in a particular sequence to get a job done, or which are coordinated through Step Functions or Destinations; the logs for a single "call" could span five log streams in five log groups.

It may sound simple enough during development; but when your production app goes viral and starts receiving hundreds of concurrent calls, tracking a single client journey through the logs could become harder than finding a needle in a haystack.
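One common way to keep a single client journey traceable across scattered log streams is to have every function emit structured log lines that carry a shared correlation ID. The following is a minimal sketch, not a prescribed method: the `correlation_id` field name, the `order-validator` function name, and the helpers `get_correlation_id` and `log_event` are all assumptions made up for illustration.

```python
import json
import sys
import time
import uuid


def get_correlation_id(event):
    """Reuse the caller's correlation ID if present, else start a new journey.

    The "correlation_id" field name is an assumption for this sketch.
    """
    return event.get("correlation_id") or str(uuid.uuid4())


def log_event(correlation_id, function_name, message, stream=sys.stdout, **fields):
    """Write one JSON log line tagged with the correlation ID."""
    record = {
        "timestamp": time.time(),
        "correlation_id": correlation_id,
        "function": function_name,
        "message": message,
    }
    record.update(fields)
    line = json.dumps(record)
    stream.write(line + "\n")
    return line


def handler(event, context=None):
    """Hypothetical handler: logs under the ID and passes it downstream."""
    correlation_id = get_correlation_id(event)
    log_event(correlation_id, "order-validator", "validation started")
    # Return the same ID so the next function in the chain logs under it too.
    return {"correlation_id": correlation_id, "status": "validated"}
```

With every line tagged this way, filtering on one ID should be enough to reassemble a journey, no matter how many log groups it touched.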

Log Aggregation

This is where log aggregation services come into play. (In fact, serverless was lucky - because log management had already received a boost, thanks to microservice architecture.) Services like Coralogix and Dashbird will ingest your logs (via push or pull) and allow you to perform filters, aggregations, summarizations etc. as if they were from one or a few sources.

With visibility into long-term data, aggregation services can - and do - actually provide more intelligent outputs, such as real-time alerts on predefined error levels/codes, and stability- or security-oriented anomaly detection through pattern recognition, machine learning etc.

Resource Usage

Even with your application logic running like clockwork, the system could start failing if it is under-provisioned and runs out of resources; or become a budget-killer if over-provisioned.

Additionally, unusual patterns in resource usage may also indicate anomalies in your applications - such as attacks, misuse and other exploits.

What can we Measure?

- memory usage

- execution time or latency: given that FaaS invocations are under strict timeout constraints, time becomes a critical resource. You do not want your function to time out before completing its job; but you also do not want it to remain hung over a bad database connection that is going to take two minutes to time out on its own.

- compute power used: in many platforms, allocated compute power grows in proportion to memory, so the product of allocated memory and execution time is a good relative measure for the total compute power consumed by the request. In fact, most platforms actually bill you by GB-seconds where GB refers to memory allocation.
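The memory-time product mentioned in the last item is simple arithmetic. Here is a small sketch; the function names are made up for illustration, and it assumes memory is given in MB and duration in milliseconds.

```python
def gb_seconds(memory_mb, duration_ms):
    """Memory-time product for one invocation, in GB-seconds."""
    return (memory_mb / 1024) * (duration_ms / 1000)


def total_gb_seconds(invocations):
    """Sum GB-seconds over (memory_mb, duration_ms) pairs."""
    return sum(gb_seconds(memory_mb, duration_ms)
               for memory_mb, duration_ms in invocations)
```

For example, a function allocated 512 MB that runs for 200 ms consumes 0.5 GB x 0.2 s = 0.1 GB-seconds.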

Resource Isolation

Serverless invocations are isolated, which means one failure cannot affect another request. Sadly, it also means that each runtime instance should be able to handle the largest possible input/request on its own, as there is virtually no resource sharing across instances; one request handler cannot "borrow" memory from another, as is the case in monolithic apps with multiple services on the same runtime/VM.

This is bad in the sense that it denies you the luxury of maintaining shared resources (e.g. connection pools, memory-mapped file caches, etc.) pooled across requests. But at the same time, it means that managing and monitoring per-request resources becomes easier; each time you allocate or measure a resource, you are doing it for a single request.

From Logs

As mentioned before, serverless logs usually contain execution summaries stating the allocated vs. maximum memory usages, and exact vs. billed execution time.
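As a rough illustration, the sketch below pulls those figures out of an AWS Lambda `REPORT` log line. The pattern is based on the commonly seen layout of that line and is an assumption: the platform may add or rename fields, so treat it as a starting point rather than a stable contract. The `parse_report_line` helper is a made-up name.

```python
import re

# Assumed layout of a Lambda REPORT line; fields are usually tab-separated.
REPORT_PATTERN = re.compile(
    r"REPORT RequestId: (?P<request_id>\S+)\s+"
    r"Duration: (?P<duration_ms>[\d.]+) ms\s+"
    r"Billed Duration: (?P<billed_ms>[\d.]+) ms\s+"
    r"Memory Size: (?P<memory_mb>\d+) MB\s+"
    r"Max Memory Used: (?P<max_memory_mb>\d+) MB"
)


def parse_report_line(line):
    """Return the execution summary as a dict, or None if the line differs."""
    match = REPORT_PATTERN.search(line)
    if match is None:
        return None
    return {
        "request_id": match.group("request_id"),
        "duration_ms": float(match.group("duration_ms")),
        "billed_ms": float(match.group("billed_ms")),
        "memory_mb": int(match.group("memory_mb")),
        "max_memory_mb": int(match.group("max_memory_mb")),
    }


# Illustrative sample line (made-up values), not taken from a real log.
SAMPLE = ("REPORT RequestId: 00000000-0000-0000-0000-000000000000\t"
          "Duration: 12.34 ms\tBilled Duration: 100 ms\t"
          "Memory Size: 128 MB\tMax Memory Used: 18 MB")
```

Comparing `max_memory_mb` against `memory_mb` over many invocations gives a first hint of over- or under-provisioning.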

From Runtime Itself

Your application runs as a Linux process, so it is always possible to grab resource usage data from the runtime itself - either via standard language/runtime calls (e.g. Node.js process.memoryUsage()) or by directly inspecting OS-level interfaces (e.g. /proc/self/stat). Some aspects like execution time are also provided by the serverless runtime layer - such as remaining time via context.getRemainingTimeInMillis() on Lambda.
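For a Python function, a comparable sketch could use the standard `resource` module together with the Lambda context's `get_remaining_time_in_millis()` method (the Python counterpart of the call named above). The handler body and the `FakeContext` class are stand-ins invented for this example so that it can run outside Lambda.

```python
import resource
import time


def peak_memory_kb():
    """Peak resident set size of this process.

    On Linux, ru_maxrss is reported in kilobytes (macOS reports bytes).
    """
    return resource.getrusage(resource.RUSAGE_SELF).ru_maxrss


def handler(event, context):
    started = time.monotonic()
    remaining_ms = context.get_remaining_time_in_millis()
    # Placeholder for the real work of the function.
    result = sum(range(1000))
    return {
        "result": result,
        "elapsed_ms": (time.monotonic() - started) * 1000,
        "remaining_ms_at_start": remaining_ms,
        "peak_memory_kb": peak_memory_kb(),
    }


class FakeContext:
    """Stand-in for the Lambda context object, for local runs only."""

    def get_remaining_time_in_millis(self):
        return 3000
```

Checking the remaining time before a slow call lets a function bail out cleanly instead of being cut off by the platform timeout.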

From Serverless Platform Metrics

Most platforms keep track of resource metrics themselves. AWS CloudWatch Metrics is perhaps the best example.

While CloudWatch does not yet offer memory or compute graphs, third party tooling like SigmaDash can compute them on your behalf.

Platforms usually provide better metrics for non-FaaS systems than they do for FaaS; probably because of the same challenges they face in accurately monitoring the highly dynamic environment. But they are constantly upgrading their techniques, so we can always hope for better.

For non-compute services like storage (e.g. AWS S3), persistence (e.g. AWS DynamoDB) and API hosting (e.g. AWS API Gateway), platforms offer detailed metrics. The upside with these built-in metrics is that they offer different granularity levels, and basic filtering and summarization, out of the box. The downside, often, is that they are fairly non-real-time (generally several seconds behind on the actual request).

Also, for obvious reasons, longer analysis periods mean coarser granularity. Although you can request higher-precision data, or report custom metrics at desired precisions, it will cost you more - and, at serverless scales, such costs can add up fairly quickly.

Invocations, Errors and Throttles

While logs and resource usage can reveal anomalies during execution, invocation, error and throttle counts are more straightforward serverless monitoring metrics, tied to the actual end results of application invocations. In that sense, resource monitoring is oriented more towards performance, whereas error/throttle monitoring leans more towards correctness, stability and scalability.

You can usually grab these numbers from the built-in metrics of the platform itself.

Invocations-to-error Ratio

Similar to signal-to-noise ratio (SNR), this is a measure of how "efficient" or "robust" your application is; the higher it is, the more requests your application can successfully serve without running into an error.

Of course, the validity of this serverless monitoring metric depends on the actual nature and intent of your code, as well as the nature of inputs; if you receive lots of erratic or failure-prone inputs, and you are actually supposed to fail the invocation in such cases, this value would be naturally low.
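Given the invocation and error counts for a period, the ratio is a one-line calculation. The sketch below uses made-up helper names and returns infinity for an error-free period; that is one possible convention, not a standard one.

```python
def invocations_to_error_ratio(invocations, errors):
    """Invocations per error; infinite when there were no errors."""
    if errors == 0:
        return float("inf")
    return invocations / errors


def error_rate(invocations, errors):
    """Fraction of invocations that failed (the inverse view of the ratio)."""
    return errors / invocations if invocations else 0.0
```

For instance, 1000 invocations with 5 errors give a ratio of 200 and an error rate of 0.5%.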

Throttles

Presence of throttles could indicate one of a few things:

- You have (perhaps mistakenly) throttled your function (or the API/interface) below its regular concurrency limit.

- Your application is under a denial-of-service (DoS) attack.

- Your application's scalability needs go beyond what the serverless platform can offer (maybe it has gone viral); you need to re-architect it to batch up invocations, introduce back-offs to safely deal with rejections, etc.

Note that throttling issues are not always limited to front-facing elements; if you are asynchronously triggering a function from an internal data stream (e.g. a Lambda with a Kinesis trigger) you could run into throttling, based on the dynamics of the rest of the application.

Also, throttling is not always in front of FaaS. If a third-party API that your application invokes (e.g. Amazon's own seller APIs) gets throttled, you could run into issues even without an apparent increase in the fronting traffic, events or invocation counts. Worse, it could lead to chain reactions or cascading failures - where throttling of one service can flow down the execution path to others, and eventually to the user-facing components.

Once you are on the upper ends of scalability, only a good combination of API knowledge, fail-safe measures and serverless monitoring techniques - logs, throttles and runtime latency measurements - can save you from such scenarios.
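One such fail-safe measure is retrying throttled calls with exponential back-off and jitter. The following is a minimal sketch under stated assumptions: `ThrottledError` is a hypothetical exception standing in for whatever your client library raises on throttling, and the default delays are arbitrary.

```python
import random
import time


class ThrottledError(Exception):
    """Hypothetical error raised when a downstream call is throttled."""


def call_with_backoff(operation, max_attempts=5, base_delay=0.1,
                      max_delay=5.0, sleep=time.sleep):
    """Call operation(), retrying on ThrottledError with capped back-off."""
    for attempt in range(max_attempts):
        try:
            return operation()
        except ThrottledError:
            if attempt == max_attempts - 1:
                raise  # Out of attempts: surface the throttle to the caller.
            # Full jitter: wait a random time up to the capped exponential delay.
            delay = random.uniform(0, min(max_delay, base_delay * 2 ** attempt))
            sleep(delay)
```

The random jitter spreads retries out, so that many throttled callers do not all hit the service again at the same instant. Keep the total retry time well within the function timeout.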

Instrumenting

So far, all the serverless monitoring techniques we discussed have depended on what the runtime and platform offer by default, or off the shelf. However, people find these inadequate for monitoring serious production applications where:

- service health and performance are critical,

- early detection of anomalies and threats is vital, and

- alerts and recovery actions need to be as fine-grained and real-time as possible.

To get beyond what the platform offers, you usually need to instrument your runtime and grab additional serverless monitoring insights. This can be via:

- wrapper code and/or additional libraries (e.g. SignalFX or lambda-monitor),

- installation of metrics-reporter agents (e.g. New Relic APM), or

- modification of the runtime itself (e.g. via layers or custom runtimes, as done by IOpipe, DataDog and Thundra).

For FaaS, this obviously comes with implications on performance - your function runtime now has to spare some cycles to gather and report the metrics; in many cases, the tools also require you to modify your application code. Often these changes are subtle enough - such as importing a library, calling a third-party method, etc.; nevertheless, they go against the developers' and dev-ops' pipe dream of code-free monitoring.
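To give a feel for how small such a code change can be, here is a sketch of a generic wrapper that times a handler and reports the outcome. It does not reproduce any vendor's library; the `instrumented` decorator and its `report` sink are hypothetical, and a real agent would ship the record to a metrics backend instead of printing it.

```python
import functools
import json
import time


def instrumented(report=print):
    """Decorator factory: wrap a handler and report timing and outcome.

    `report` is a hypothetical sink that receives one JSON string per call.
    """
    def decorator(handler):
        @functools.wraps(handler)
        def wrapper(event, context):
            started = time.monotonic()
            outcome = "success"
            try:
                return handler(event, context)
            except Exception:
                outcome = "error"
                raise
            finally:
                # Runs on both paths, so failures are measured as well.
                report(json.dumps({
                    "handler": handler.__name__,
                    "outcome": outcome,
                    "duration_ms": round((time.monotonic() - started) * 1000, 3),
                }))
        return wrapper
    return decorator


@instrumented()
def handler(event, context):
    return {"echo": event}
```

The application change is the single decorator line, but the extra work in `finally` is exactly the kind of overhead the paragraph above refers to.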

Realizing the demand for advanced serverless monitoring and diagnostics, cloud platforms themselves have also come forth with instrumentation options:

- AWS X-Ray ships with Lambda by default, and can be enabled via a simple configuration with no code change. Once enabled, it can report the latencies of different invocation phases: initialization, individual AWS API/SDK calls, etc. With a bit of custom code it can also capture calls to any downstream HTTP service - which can come in handy when monitoring and troubleshooting latency issues.

- Google Cloud Functions offers StackDriver Monitoring for advanced analytics via built-in timing, invocation and memory metrics; as well as custom metrics reporting and alerting.

- Azure Functions has its own Azure Application Insights service offering performance analytics.

However, the best work related to instrumentation has come from third-party providers, such as the ones mentioned earlier. These usually require you to subscribe to their services and configure your serverless platforms to report metrics to theirs; in return, they provide analysis results and insights through their own dashboards.

Serverless Monitoring, up against Privacy and Security Concerns

The obvious problem with delegating serverless monitoring responsibilities to third parties is that you need to guarantee the safety of the data you are sharing; after all, it is your customers' data.

Even if you implicitly trust the third party, you need to take precautions to minimize the damage in case the monitoring data gets leaked - for the sake of your users as well as the security and integrity of your application.

- Anonymizing and screening your logs for sensitive content,

- using secure channels for metrics sharing, and

- always sticking to the principle of least privilege during gathering, sharing and analysis of data

will get you started on a robust yet safe path to efficiently monitoring your serverless application.
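As an illustration of the first point, screening logs for sensitive content, the sketch below redacts a log record before it leaves the function. The key list and the e-mail pattern are assumptions chosen for the example; a real deployment would need rules that match its own data.

```python
import re

# Hypothetical list of field names whose values must never be logged.
SENSITIVE_KEYS = {"password", "token", "authorization", "card_number"}
# Deliberately simple e-mail pattern; it will not catch every valid address.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def redact(value, key=None):
    """Return a copy of value with sensitive fields and e-mails masked."""
    if key is not None and str(key).lower() in SENSITIVE_KEYS:
        return "[REDACTED]"
    if isinstance(value, dict):
        return {k: redact(v, k) for k, v in value.items()}
    if isinstance(value, list):
        return [redact(item) for item in value]
    if isinstance(value, str):
        return EMAIL_PATTERN.sub("[EMAIL]", value)
    return value
```

Applying `redact` just before a record is logged or shipped means the third party never receives the raw values in the first place.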